Who Let the Agents Out?

Building the Safety Foundations for the New Internet

Securing AI agents is a defining challenge of the next decade. It feels as though we’re at base camp - much like when cyber icon McAfee was founded in 1987, soon after the internet first took shape. There is an opportunity to build the founding privacy and security infrastructure for our new internet, an internet where agents are central actors.

Introduction

The early calls that AI was eating the world were a bit premature - we had generic chatbots, agents that missed the mark and overly hyped financial markets. However, this has already changed. Well, we may still have hyped financial markets, but when it comes to progress OpenAI’s o3 marked a step change and introduced a new era of our social and digital lives, reshaping how we work, connect, and create online. Over the next 12–18 months we think that agentic AI will move beyond early adopters, and touch every industry, as companies chase bottom line impact.

AI, and even more so agentic AI, opens up meaningful opportunities for society-improving impacts: AI that gives doctors more time with patients, tools that reduce the admin workload for teachers so they can focus on their profession, and bringing new medicines to market orders of magnitude quicker. Yet, all these uses have significant implications for our privacy and safety.

Agents are AI systems that can plan, remember and take action, independent of a human. This extension from chatbots expands the attack surface, meaning the number of attackable interactions and pieces of data is much larger. When you stack multiple agents on top of one another, you amplify these risks further. Risks such as permissions and unintended access, agent misalignment, prompt injection and data poisoning.

There are two sides to this: on the one hand, as agents proliferate, the cost of weak defence compounds. Yet on the other, AI also boosts our defensive potential: e.g. multiple security agents per worker (a recent Anthropic paper showed AI could outperform humans on some cybersecurity tasks).

We believe this agentic transition demands a new breed of AI-native tools. Traditional security approaches that centred around identity and Zero Trust were built for different actors and a different internet. This AI-native tooling is central to our collective future and is a €100+ billion problem space.

In this deep-dive we look at:

The Arc of Cyber tools - a brief history

The Scale of the Transformation - where agentic AI is accelerating

Quantifying the impact on society - the cost of inaction

Latest Market Dynamics - things are hotting up

Problem Spaces we’re interested in

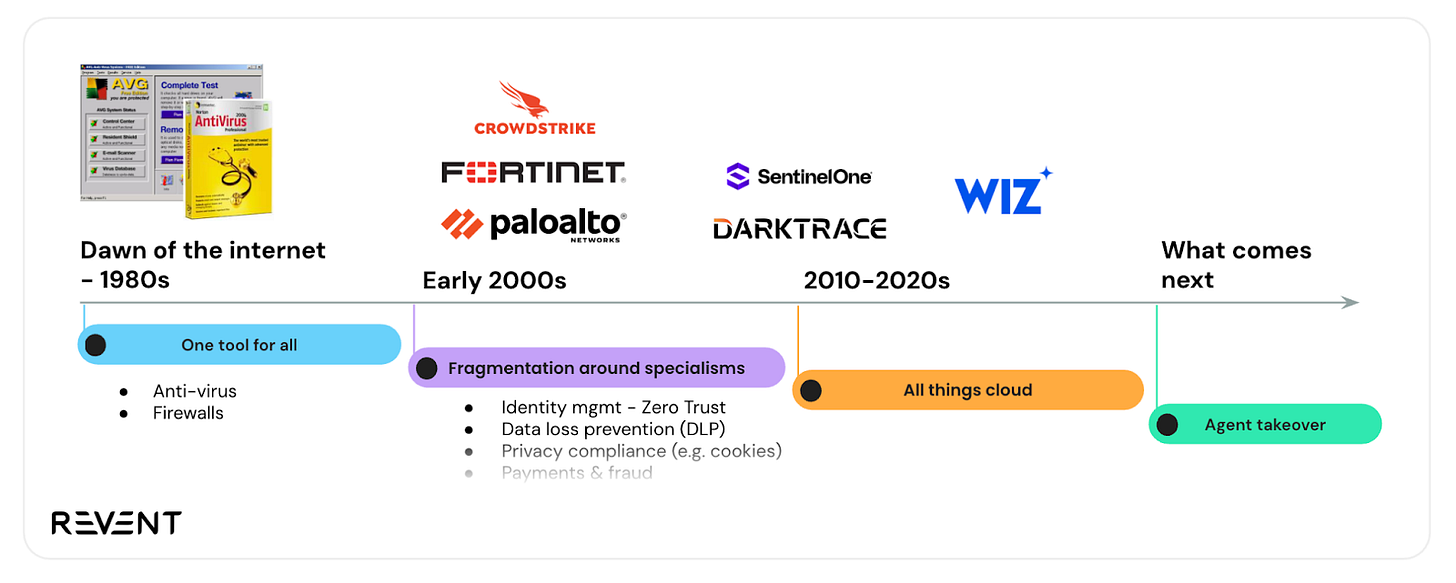

The Arc of Cyber Tools

Over the past two to three decades, we’ve witnessed the rise of the internet as we know it, and with it, the birth of an internet security industry. The first cyber tools arrived soon after the internet itself, evolving into what is now a ~$200 billion market.

Dawn of the internet - the 80s

The term “hack” was first coined at MIT in 1955, with the earliest known examples emerging by phone in the 1960s. Once the internet began to proliferate in the 1980s, the first wave of cybersecurity tools followed. They were primitive (anti-virus software and firewalls) but marked the start of a new industry. It brings back memories of AVG Anti-Virus on Windows 95, its alerts breaking through the low drumming hum of the dial-up connection.

This soon changed, as the online world became more complex.

Fragmentation around specialisms - the 2000s

By the early 2000s, risks had multiplied and new providers were emerging to tackle them. The internet had grown more complex, creating the need for specialisation. Security began to fragment across domains: network, cloud, identity management, DLP, payments, and fraud. What began with access control spread across every layer of the digital stack.

In the early 2000s, the nature of risk looked very different to today. Nearly half of all data breaches (45%) stemmed from lost or stolen physical devices such as laptops and thumb drives (IBM, Cost of Data Breach 2025). As we entered the 2010s the big shift became the transition to cloud computing, and in turn the rise of security giants like SentinelOne (NYSE: S) and more recently, Wiz (acquired by Alphabet for $32bn).

Agent takeover

In 2025 we are experiencing our next leap forward. AI is getting faster, smarter, and cheaper. AI is now doing much of the coding and content development work required to build products. Yes, there’s hype, but we believe that when the hype fades, it reveals a major platform shift - an internet where agents are central actors.

This raises a fundamental question: does this new paradigm demand a different kind of security? Is it just another phase of specialisation, or do agents fundamentally alter how organisations operate and interact with the world?

We think it’s different. This transformation requires a new generation of AI-native defence tools. Traditional approaches, built around identity and Zero Trust, were designed for another era, and for very different actors. Prior to this transition, there was a clear notion of boundaries, around which CISOs could define accesses and controls within a company. However, with agents we lose the clear definition of these operating spaces, as agents operate with vast volumes of data and on platforms outside the company boundary.

The Scale of the Transformation

A recent PwC survey found that more than 75% of large companies are already using agents. OpenAI launched AgentKit this week, a full toolkit for developers and enterprises to build, deploy, and optimise agents. Anthropic with its Claude agent solution launched in August. At the earlier stages, activity is also surging: over 40% of the most recent YC batch was building agentic AI.

The challenge is that agentic AI is fundamentally harder to secure than traditional software. AI systems are brittle, easily broken, and non-deterministic, unlike traditional software, they operate in open environments, drawing on inputs from across the internet, often beyond an organisation’s boundary.

The implications of that are profound. Agents can access and act on your information, making payments, issuing refunds, or sending hiring and firing emails. They represent a new vector of attack. Distinct security problems are emerging that traditional cyber tools were never built to handle: defining agent identity and permissioning, preventing prompt injection, mitigating cascading failures across multi-agent teams. Agents also amplify existing risks, extending the reach of insider threats; malicious insiders already account for the most expensive and frequent data breaches (IBM).

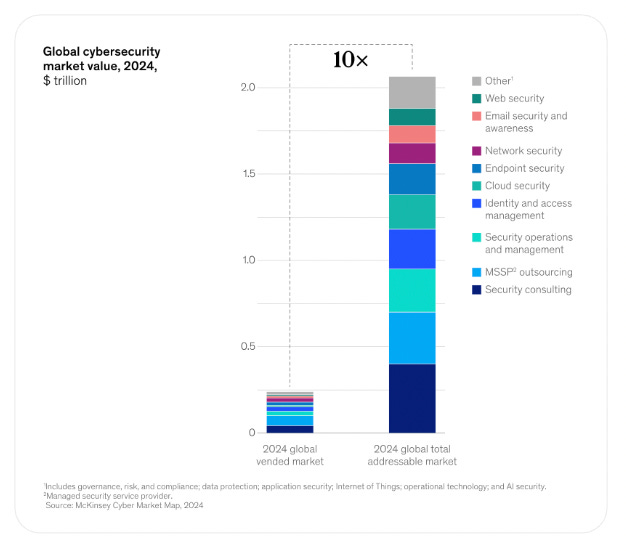

The evidence is clear: safety, privacy and security risks are on the rise. McKinsey estimates the addressable market for cyber tools could grow tenfold from today’s vended market, driven in large part by AI adoption. The World Economic Forum put the global cost of cybercrime at $3 trillion in 2015; by 2025, that figure is expected to reach $10.5 trillion annually. The OECD’s AI Incidents Monitor shows the same pattern: a steady rise in recent years, followed by a sharp spike in 2025.

This shift, from conversational AI to agentic AI, mirrors the move from on-prem to cloud. When cloud adoption began, it unlocked enormous capability but also an entirely new security category: Cloud Protection. Multi-billion-dollar players (Wiz, Sentinel1, Crowdstrike) were born from that transition. Agentic AI is catalysing a similar step-change.

Even the model providers acknowledge this, albeit quietly. We analysed the terms of use, safety hubs and privacy policies from four major LLM and agent platforms (OpenAI, Anthropic, Google and IBM) to see what they conclude you’re exposed to. The pattern is consistent: they warn of prompt injection, uncontrolled data retention, and hijacking risks. They advise you to keep permissions tight and secrets hidden. The fine print makes one thing clear: this is your problem.

Quantifying the Impact on Society

At Revent, we look to invest in solutions to the biggest problems facing society. One way we identify these is by quantifying externalities, i.e. the real costs of inaction. We’ve used this lens across domains from climate change to chronic disease, and it applies equally here.

We ran a quick analysis of the societal cost of data breaches and the potential savings from AI-native security. Using data from IBM’s Cost of Data Breach 2025, we conservatively estimate that deploying AI-native security instead of traditional cyber could prevent €9–10 billion in losses every year.

That’s €10 billion of value returned to society annually, through a combination of fewer breaches, reduced exposure, and faster detection. What this doesn’t even cover are the social costs of reduced privacy, and the safety impacts of physical AI. It’s a reminder that better security isn’t just a technical upgrade. It’s a public good.

Market Dynamics

We see three key trends shaping the current shape of investable opportunities in AI-native security.

One. M&A

In the past 12 months, traditional cyber companies have become increasingly acquisitive in the AI-native security space and they’re moving earlier. Lakera was acquired by Checkpoint for around $300 million, just four years after founding. Haciker was bought by Aikido less than a year after its first institutional round. CalypsoAI was acquired by F5 for $180 million. Other notable acquisitions include Prompt Security and Protect AI.

This is an interesting trend, where we’re likely to see more companies building features getting acquired by incumbents or the emerging AI-native giants. It highlights for us a key question - is this company setting out to build a lasting infrastructure?

Two. Model Providers

The major AI platforms are advertising more prominently their enterprise plan security credentials. For some, like Anthropic, this is core to their DNA, whilst for others (e.g. OpenAI’s Trust Portal), this is largely an evolution driven by enterprise demand and regulation. Frameworks like SOC 2 and the EU AI Act are beginning to put pressure on controls. However, we don’t see these becoming the dominant security offering.

Much like in traditional cyber, where security tools sit across a company’s tech stack, we think agentic AI architecture will remain fragmented. Agentic tooling will blend general-purpose and highly specialised vertical tools. This means security, safety and privacy solutions will need to sit across providers.

Three. Pre-seed Activity

This is a very active moment for ideating founders and early-stage teams in this space, and we think this is the right time to be investing early. We are speaking to brilliant founders.

It’s a unique moment. The market for AI-native security, privacy and safety is infant enough that we don’t yet have dominant monopolistic players, but is also on the cusp of such a massive eruption, that the opportunity ahead is so large. This is a moment to build the security, privacy and safety foundations of our new internet.

Problem Spaces

In short, there’s a lot that needs to be built. We’re at base camp. IBM captured it well: “AI adoption is outpacing oversight… 97% of AI-related security breaches involved systems that lacked proper controls.”.

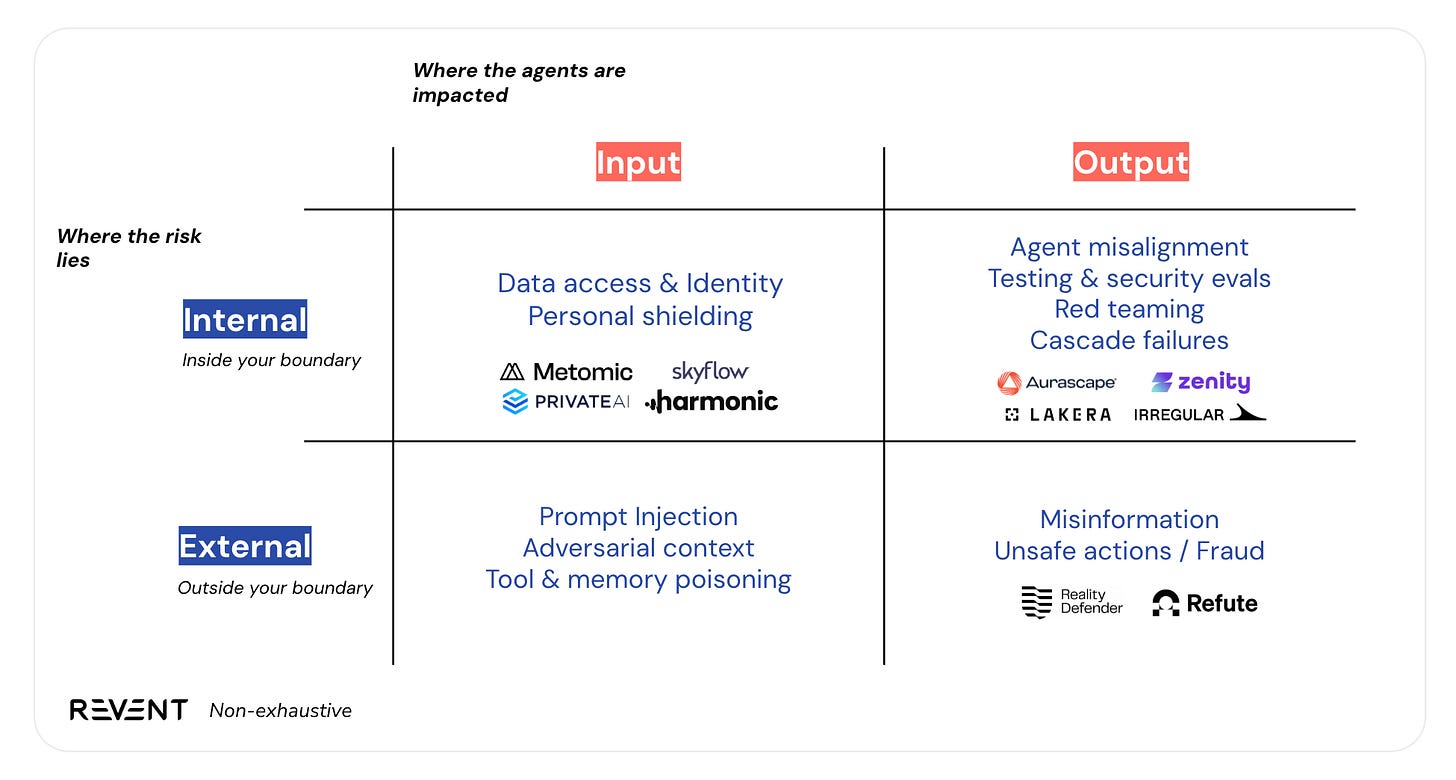

We look at these problem spaces through the lens of safety risks, because we believe teams should go to market by solving these emergent risks. To simplify, we think about AI safety risks across two dimensions:

Where the agent is impacted (in its inputs or outputs)

Where the risk primarily lies (inside or outside the organisation’s boundary)

We have found that this framing helps clarify where vulnerabilities originate, how they propagate through an agent’s lifecycle, and which layers of the defence stack remain underdeveloped.

Right now, we’re seeing the most startup activity in the internal segment, addressing risks within the company boundary. It’s perhaps a lower hanging fruit (a more tangible domain), where you can observe model outputs, test for misalignment, and tune system parameters within the company. Companies like Lakera, Irregular and Metomic are operating in this space, building tools for testing, red-teaming, and evaluation.

We still see a lot of untapped potential in the Internal / Input quadrant. How do we define agent-native identity? What would personal shielding look like at scale?

The External / Input risks (like prompt injection, adversarial context, and memory poisoning) may prove to be the most challenging. As an Anthropic paper puts it, we should expect “a mixture of predictable and surprising developments”, and the surprising will likely come from outside the boundary. Data poisoning (the process of) is one such example which is becoming a much more prominent risk; a recent paper found that an attacker doesn’t actually need to be occupying a high proportion of the models data, that the poison effect can occur from 250 documents, regardless of model size (the paper).

Across all four quadrants, the same conclusion holds, the defensive stack for an agentic world is still in its infancy. The offense is scaling fast, and defence needs to catch up.

In safety-critical industries like nuclear and aviation, they talk about Defence in Depth (thanks Will at Blue Dot); the idea that real resilience comes from layered protection, not single lines of defence. The same principle probably applies here. The risks of an agentic internet will never be solved by one company, one layer, or one technology. It will take multiple teams building across the layers of identity, evaluation, input control, alignment, and so on.

This is a systems problem. One key systems enabler that we’re excited about is insurance. There are many foundational unanswered questions about liability; major insurers are hesitant to evaluate the financial risks associated with AI technologies as the risk market remains very immature.

On the enterprise end, some companies are hesitant to invest until liability uncertainties are somewhat clarified. Currently, the model providers are insured up to a point for catastrophic mistakes, but some believe if this is triggered the insurers would struggle to rally through. AI agent insurance, which gives enterprises the confidence to deploy agents at scale, is an under-explored opportunity; AI Underwriting Company is an early example of what this could look like.

We believe multiple generational companies will be built in this security transition. The founders who thrive will treat safety and privacy not as compliance, but as the foundations of the new internet.

If that’s you, we’d love to hear from you.

This article comes at the perfect time. Your analysis of the expanding attack surface for agentic AI is spot on. Makes me think about trust, even for my personal cycling data. A briliant piece, really.